Frequently asked questions

What does the software platform do?

The platform is used to train, benchmark, and deploy Logic-Based Network (LBN) models. It takes care of data preparation, model training, optimisation, and evaluation, then produces deployable models ready for use in production systems. The goal is to remove the operational and technical friction typically associated with building and deploying AI.

Can the software train generative AI models?

Not yet. The platform is currently focussed on deterministic LBNs rather than generative models. This reflects a deliberate focus on reliability, efficiency, and explainability for industrial and operational use cases. The platform's capabilities will expand over time; subscribe to the newsletter to hear when new model types are introduced.

What types of data does the platform support?

The platform currently supports structured datasets provided in CSV format, including time-series, sensor, and tabular data. These data types are common across industrial, operational, and decision intelligence applications and align well with the strengths of LBNs. Support for additional formats will be added over time.
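
Before uploading, it can help to sanity-check a CSV file locally. The sketch below is illustrative only and is not part of the platform: the function name `check_csv`, the `required_columns` parameter, and the sample column names are assumptions made for this example, and the platform's actual validation rules may differ. It uses only Python's standard `csv` module to confirm that the file has a header row, that column names are unique, and that every data row has the same number of fields as the header.

```python
import csv
import io


def check_csv(text, required_columns=()):
    """Return a list of human-readable problems found in CSV text.

    Hypothetical helper for illustration; not a platform API.
    """
    problems = []
    reader = csv.reader(io.StringIO(text))

    # The first row is treated as the header.
    header = next(reader, None)
    if header is None:
        return ["file is empty"]

    if len(set(header)) != len(header):
        problems.append("duplicate column names in header")

    for name in required_columns:
        if name not in header:
            problems.append(f"missing required column: {name}")

    row_count = 0
    for row in reader:
        if not row:
            continue  # csv.reader yields an empty list for blank lines
        row_count += 1
        if len(row) != len(header):
            problems.append(
                f"line {reader.line_num}: expected {len(header)} fields, "
                f"found {len(row)}"
            )

    if row_count == 0:
        problems.append("no data rows")
    return problems


# Example: a small time-series file whose last row is missing a value.
sample = (
    "timestamp,sensor_a,sensor_b\n"
    "2024-01-01T00:00:00,0.1,0.2\n"
    "2024-01-01T00:01:00,0.3\n"
)
print(check_csv(sample, required_columns=("timestamp",)))
```

An empty list means none of these basic problems were found. It does not guarantee that the platform will accept the file.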

How much AI experience does a platform user need?

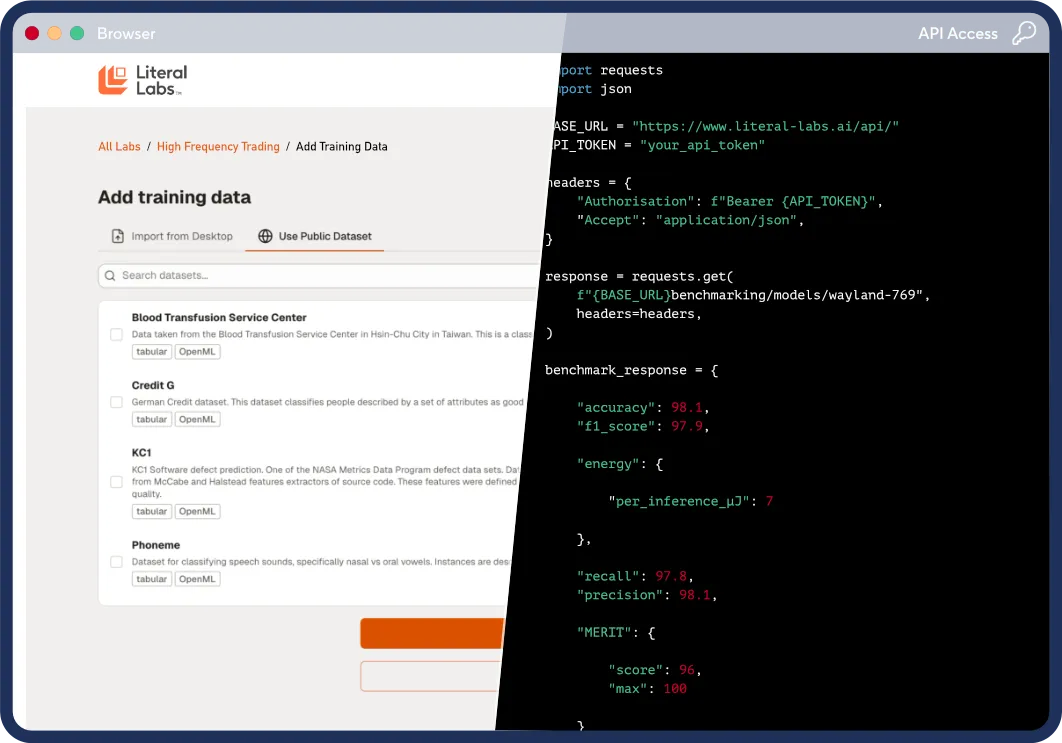

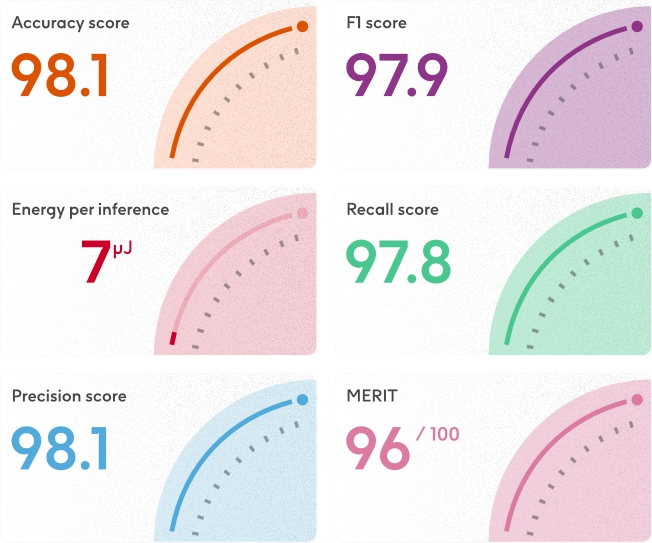

Very little prior AI experience is required. The platform provides a guided, browser-based interface that allows users to train models with minimal configuration. For more technical teams, an API is available to enable deeper control, automation, and integration into existing workflows, but this is optional rather than required.

Where can the platform deploy LBNs?

Models trained by the platform can be deployed across a wide range of environments, from embedded and edge devices through to on-premise servers and cloud infrastructure. The platform is designed to support real-world deployment scenarios rather than limiting models to research or hosted inference environments.

What hardware can LBNs be deployed on?

LBNs produced by the platform are hardware-agnostic. They can run efficiently on microcontrollers, standard CPUs, and server-class hardware without requiring GPUs or specialised accelerators. This makes deployment possible in resource-constrained environments as well as at scale.

Does the platform manage training infrastructure?

Yes. Training is handled on managed infrastructure, so users do not need to provision, maintain, or optimise their own training hardware. This reduces operational overhead and allows teams to focus on data, use cases, and outcomes rather than infrastructure.

How does the platform fit into existing systems?

The platform is designed to integrate cleanly with existing data pipelines and production systems. Trained models can be exported and embedded directly into applications, devices, or services, while the API enables automation and integration into CI/CD or MLOps-style workflows.