History of the Tsetlin Machine

At the heart of Literal Labs' training tool for logic-based AI models sits something extraordinary: the Tsetlin Machine. This powerful yet elegantly simple machine learning architecture enables our models to deliver state-of-the-art speed and ultra-low power consumption while remaining naturally interpretable.

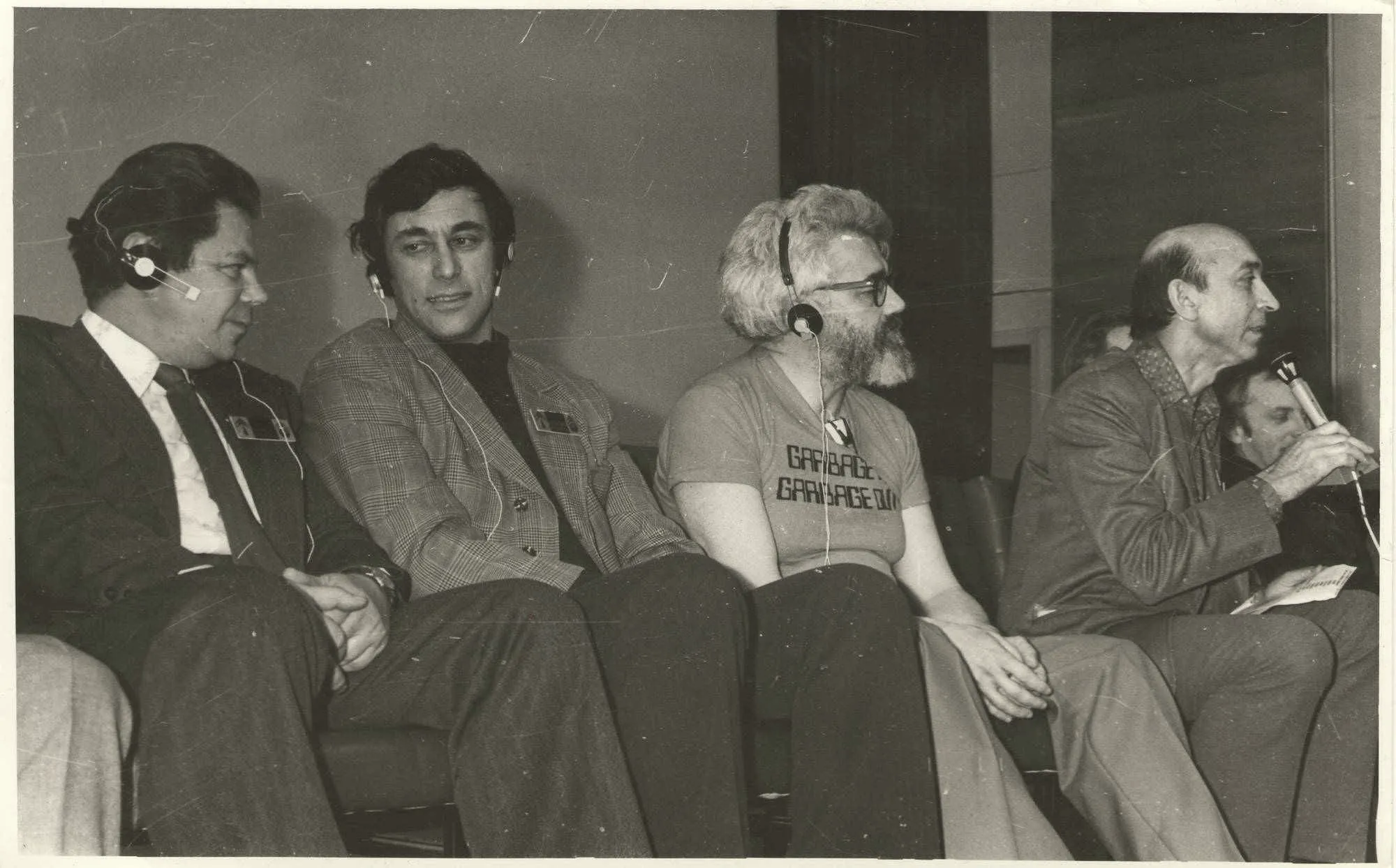

The Tsetlin Machine is not just another machine learning algorithm. It draws on principles laid down by Mikhail Tsetlin, a visionary Soviet mathematician, who in the 1960s explored a radically different path to artificial intelligence. Rather than mimicking biological neurons, Tsetlin’s approach was rooted in learning automata and game theory. He recognised that logic—expressed through what we now call Tsetlin automata—could classify data more efficiently, forging a new direction in AI.

Together with Victor Varshavsky, advisor to Literal Labs' co-founder Alex Yakovlev, Tsetlin developed theories, algorithms, and applications that solved problems across fields from engineering to economics, sociology and medicine. Although Tsetlin died young in 1966, the research he started branched out into various fields, including control systems and circuit design. But the AI element of the Tsetlin approach lay dormant, until now.

How Tsetlin Machines work

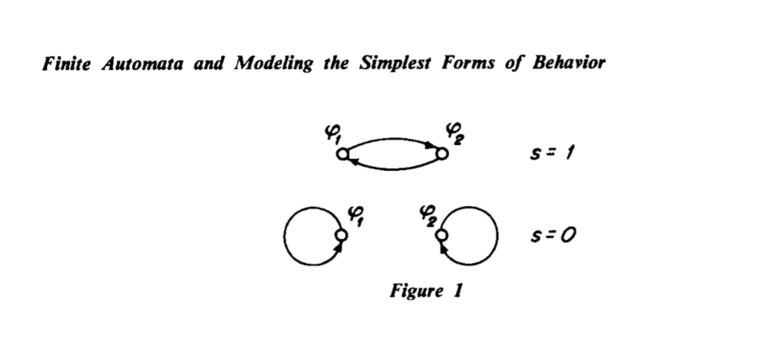

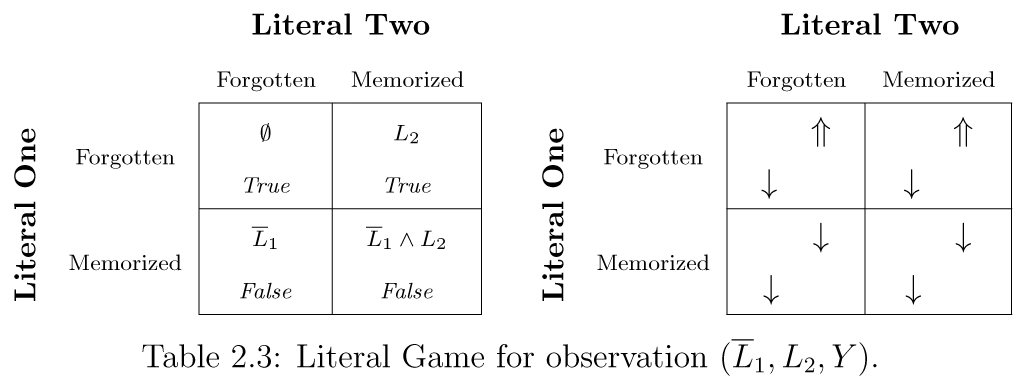

The Tsetlin Machine is a family of machine learning algorithms, and associated computational architectures, that uses learning automata, called Tsetlin automata, together with game theory to build logic propositions that classify data obtained from the machine's environment. These automata configure the connections between input literals, which represent the features in the input data, and the propositions used to produce classification decisions. Depending on whether each decision turns out to be correct or erroneous, the feedback logic then issues penalties and rewards to the automata.
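To make the roles of automata, literals, and propositions more concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not Literal Labs' implementation: the class name `TsetlinAutomaton`, the helpers `literals` and `evaluate_clause`, and the depth of three states per action are all hypothetical choices. The probabilistic feedback rules a full Tsetlin Machine uses to decide when to reward or penalise are left out, so rewards and penalties are applied by hand.

```python
class TsetlinAutomaton:
    """Two-action automaton that decides whether one literal is included.

    States 1..n mean "exclude", states n+1..2n mean "include".
    (Hypothetical sketch; n=3 is an arbitrary illustrative depth.)
    """

    def __init__(self, n=3):
        self.n = n
        self.state = n  # start in the weakest "exclude" state

    def include(self):
        return self.state > self.n

    def reward(self):
        # Reinforce the current action: move deeper into its half.
        if self.include():
            self.state = min(self.state + 1, 2 * self.n)
        else:
            self.state = max(self.state - 1, 1)

    def penalize(self):
        # Weaken the current action: move towards the other half.
        if self.include():
            self.state -= 1
        else:
            self.state += 1


def literals(features):
    """Each binary feature contributes itself and its negation."""
    return list(features) + [1 - x for x in features]


def evaluate_clause(automata, features):
    """AND together every literal whose automaton says "include".

    A clause with no included literals evaluates to 1 in this sketch.
    """
    lits = literals(features)
    return int(all(lit for ta, lit in zip(automata, lits) if ta.include()))


# Two features give four literals: x1, x2, NOT x1, NOT x2.
automata = [TsetlinAutomaton() for _ in range(4)]
automata[0].penalize()  # tips the automaton for x1 into "include"
automata[3].penalize()  # tips the automaton for NOT x2 into "include"

# The clause now reads: x1 AND NOT x2.
print(evaluate_clause(automata, [1, 0]))
print(evaluate_clause(automata, [1, 1]))
```

Because the learned proposition is just a conjunction of included literals, it can be read directly as a logical rule, which is where the interpretability mentioned earlier comes from.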