Train the best models for edge AI

Use Literal Labs’ AI model training platform to build, test, and deploy logic-based network (LBN) AI models that run over 50x faster and use over 50x less energy than neural networks.

From data ingestion to deployment, our platform automates and guides you through the entire process of training and deploying your own fully custom, logic-based AI models.

How it gives you more

GPUs and accelerators aren’t the future. They’re the bottleneck. Literal Labs forges fast, efficient, explainable, logic-based AI models from your data, enabling accurate, accelerator-free AI performance everywhere from the battery-powered edge to cloud servers.

AI can’t scale if every model requires specialised hardware. We remove that barrier with logic-based networks. Faster, smaller, and explainable by design, they run efficiently on CPUs and microcontrollers, allowing you to avoid the GPU tax altogether.

Original, not just optimisation

LBNs aren’t an optimisation service. They’re high-speed, low-energy, explainable, logic-based AI models built from the ground up using Literal Labs’ exclusive architecture and algorithms. Most model training tools tweak what already exists. We craft what never did — an entirely new class of model, designed to perform where others can’t.
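To make "logic-based" concrete, here is a toy sketch of a classifier built from Boolean clauses that vote. It is an illustration only and does not represent Literal Labs' architecture or algorithms, which this page does not describe. The clause format, the `classify` function, and the two-sensor rules are all hypothetical. The point is that inference needs only bit comparisons and integer counting, which is the kind of work CPUs and microcontrollers handle cheaply.

```python
from typing import List, Sequence, Tuple

# A literal term is (feature_index, expected_value): it holds when the input
# bit at that index equals the expected value. (Hypothetical format.)
LiteralTerm = Tuple[int, bool]
# A clause is a conjunction (AND) of literal terms.
Clause = List[LiteralTerm]


def clause_fires(clause: Clause, bits: Sequence[bool]) -> bool:
    """Return True when every literal term in the clause matches the input."""
    return all(bits[index] == expected for index, expected in clause)


def classify(bits: Sequence[bool], positive: List[Clause], negative: List[Clause]) -> int:
    """Clauses for the class add one vote; clauses against it subtract one."""
    votes = sum(clause_fires(c, bits) for c in positive)
    votes -= sum(clause_fires(c, bits) for c in negative)
    return 1 if votes > 0 else 0


# Hand-written, made-up rules for a two-sensor alarm:
# bit 0 = "temperature high", bit 1 = "vibration high".
POSITIVE = [[(0, True), (1, True)], [(1, True)]]
NEGATIVE = [[(0, False), (1, False)]]

print(classify([True, True], POSITIVE, NEGATIVE))
print(classify([False, False], POSITIVE, NEGATIVE))
```

Because every clause is a readable rule, the reason for a prediction can be traced to the clauses that fired, which is one way a logic-based model can be explainable by design.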


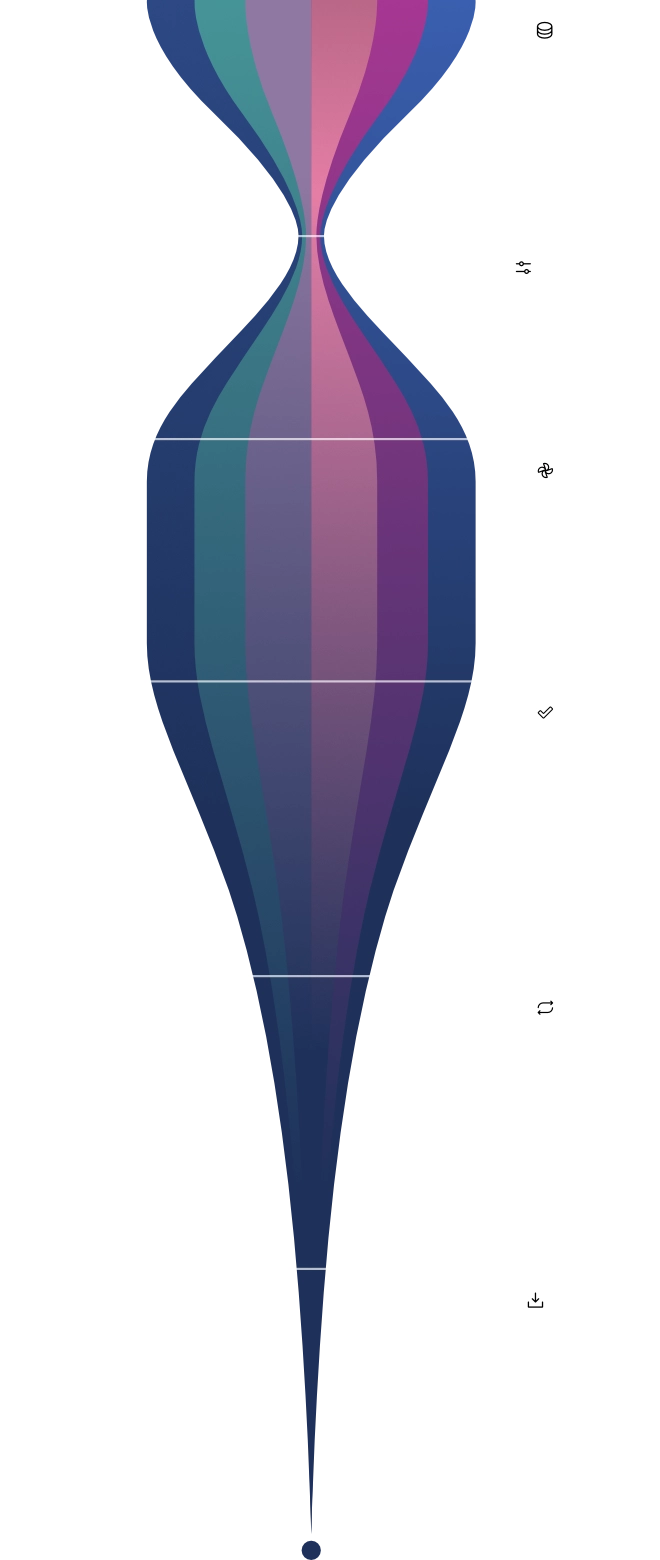

A billion to one

Literal Labs’ platform doesn’t stop at trial and error. It intelligently tests thousands of logic-based network configurations and performs billions of calculations to refine them. The result: one model, trained on your data and tuned to your deployment and performance needs. Precision forged at scale.

AI without the GPUs

High performance shouldn’t demand racks of accelerators or spiralling cloud bills. LBNs run on microcontrollers, CPUs, and standard servers. They’re small enough for IoT, strong enough for enterprise. Already optimised, they require no GPUs, TPUs, or custom hardware. Deploy intelligence, not hardware bills.


Accuracy without excess

The average LBN model is smaller than 40 kB, without sacrificing accuracy. Benchmarking shows that LBN accuracy stays within ±2% of larger, resource-hungry AI algorithms. Small in memory, LBNs are sharp in performance.

GUI when you want, API when you don’t, seamlessly

You don’t need a large engineering team to get results. Literal Labs’ platform simplifies and accelerates training, benchmarking, and deployment in-browser or through API, so you can fit it seamlessly into the way you work best.

AI-assisted configurations

A billion models to one. The platform performs billions of calculations and tests, and benchmarks thousands of models, all to help you find the single most accurate and performant model for your chosen deployment. And it does it automatically and intelligently, making the decisions that an AI engineer would, to guide your model speedily towards completion.

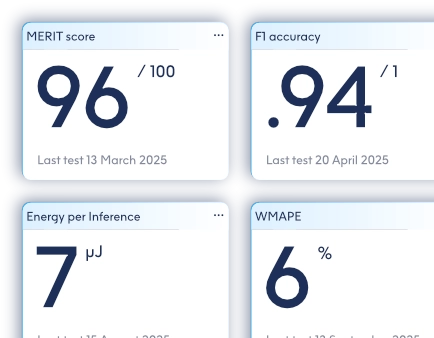

Benchmark without guesswork

Stop wasting time on trial-and-error benchmarking. The platform automates the process, comparing candidate configurations and models directly against your data and deployment constraints. The result: clear performance metrics without the endless grind. Automated benchmarking. No second-guessing.
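As a rough sketch of what comparing candidates against deployment constraints can look like, the example below filters a list of benchmarked models by size and latency limits, then keeps the most accurate survivor. It is not the platform's actual selection logic. The `Candidate` fields, the `pick_best` function, and all the numbers are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    """One benchmarked model configuration (hypothetical fields)."""
    name: str
    accuracy: float    # fraction correct on held-out data, 0.0 to 1.0
    size_kb: float     # model footprint in kilobytes
    latency_ms: float  # inference time per sample on the target device


def pick_best(
    candidates: List[Candidate],
    max_size_kb: float,
    max_latency_ms: float,
) -> Optional[Candidate]:
    """Drop candidates that break a constraint, then take the most accurate.

    Ties on accuracy are broken in favour of the smaller model.
    Returns None when no candidate fits the deployment target.
    """
    feasible = [
        c for c in candidates
        if c.size_kb <= max_size_kb and c.latency_ms <= max_latency_ms
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.accuracy, -c.size_kb))


# Made-up benchmark results, for illustration only.
candidates = [
    Candidate("config-a", accuracy=0.95, size_kb=120.0, latency_ms=4.0),
    Candidate("config-b", accuracy=0.93, size_kb=38.0, latency_ms=1.5),
    Candidate("config-c", accuracy=0.91, size_kb=20.0, latency_ms=0.8),
]

best = pick_best(candidates, max_size_kb=64.0, max_latency_ms=2.0)
print(best.name if best else "no candidate fits the target")
```

Here the most accurate configuration is rejected because it exceeds the size and latency limits, so the choice falls to the best model that fits the target device.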

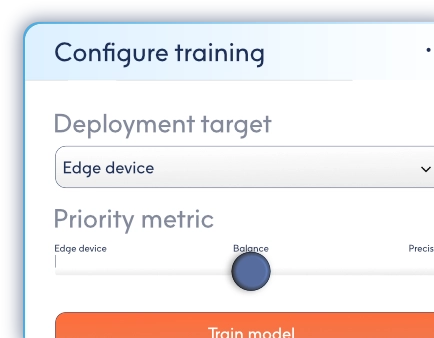

From data to deploy, all in browser

Upload a dataset. Define your deployment target. Watch an LBN train. And then deploy it. The platform streamlines the process so that anyone can create efficient AI models in a browser tab or through their existing workflow via API. No complex installs. No GPU farm. Just data in, model out.

Simple model retraining

Today’s perfect model might need refinement tomorrow. The platform makes retraining simple: upload fresh data and it can automatically improve your existing models. Browser or script, it adapts to your workflow. Continuous improvement, without the overhead.

Fine-tune further

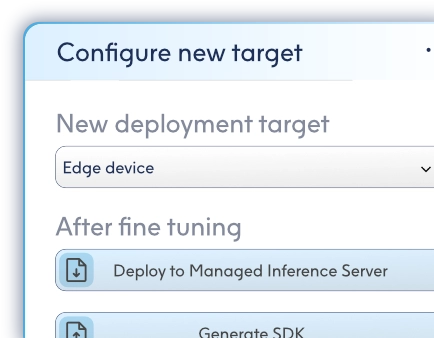

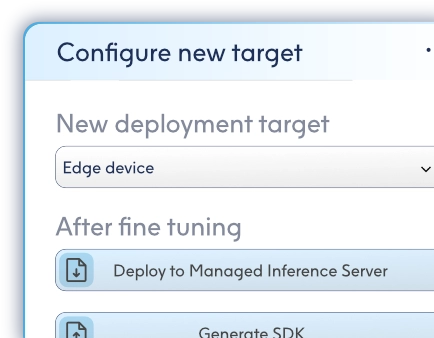

Once it has crafted your model, the platform can still refine it further. Adjust deployment parameters, push for lower energy, or tweak any accuracy trade-offs. The platform intelligently adapts, re-tuning the LBN to your exact requirements. Fine-tuning, without the fuss.

Extensive API for workflow integration (coming)

Automate your model training at scale or deeply integrate it with your existing workflows and pipelines through Literal Labs’ extensive API. Browser simplicity for some, code-level integration for others. Coming 2026 — get notified when it’s ready.

import requests

API_URL = "https://www.literal-labs.ai/api/"

TOKEN = "your_api_token"

resp = requests.get(API_URL, headers={"Authorization": f"Bearer {TOKEN}"}, timeout=10)

print(resp.status_code, resp.text[:500])

AI that performs wherever you need it

Deployment should be simple. On microcontrollers. On servers. On anything in between.

With Literal Labs, deployment is simple. LBNs are small enough for IoT devices, efficient enough for even battery-powered edge computing, and accurate enough for server workloads. One platform, countless deployment options.

Embed on edge

Small and silicon-agnostic. LBNs embed on everything from coin-cell sensors to MCUs, running in C++ across Arm, RISC-V, PowerPC, and x86 architectures from any maker.

Execute on server

CPU-driven, compute-reduced, and cloud-ready. Deploy your custom LBN to a Managed Inference Server, stream data to it, and receive predictions back, with no GPUs and no excess.
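The stream-and-predict pattern might be sketched on the client side as follows. The Managed Inference Server's API is not documented on this page, so the sketch deliberately avoids inventing endpoints: the transport is passed in as a `predict` callable, and `fake_predict` is a local stand-in for whatever call the real service provides. The function names and batch size are assumptions.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List

Reading = List[float]


def batched(readings: Iterable[Reading], size: int) -> Iterator[List[Reading]]:
    """Group a (possibly endless) stream of readings into lists of `size`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(readings)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def stream_predictions(
    readings: Iterable[Reading],
    predict: Callable[[List[Reading]], List[int]],
    batch_size: int = 32,
) -> Iterator[int]:
    """Send readings batch by batch and yield predictions as they come back."""
    for batch in batched(readings, batch_size):
        yield from predict(batch)


def fake_predict(batch: List[Reading]) -> List[int]:
    """Local stand-in for a remote inference call (hypothetical rule)."""
    return [1 if sum(reading) > 1.0 else 0 for reading in batch]


# Made-up sensor feed, consumed lazily so the whole stream never sits in memory.
sensor_feed = ([0.2 * i, 0.1] for i in range(6))
print(list(stream_predictions(sensor_feed, fake_predict, batch_size=4)))
```

Injecting the transport keeps the batching logic testable offline and lets the same loop work with a real client once the service's interface is known.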

Case studies and benchmarks

Be early to logic-based AI

Join early access to the platform used to train logic-based AI that runs faster, uses less energy, and fits the hardware you already have.

Frequently asked questions

How do I train an AI model using Literal Labs?

Training LBN models with Literal Labs is designed to be straightforward. Upload your dataset to the platform via the browser-based interface or API, configure a small number of training options, and start training. The platform handles data preparation, model training, benchmarking, and optimisation automatically. Once complete, your trained model is ready for deployment to your chosen environment.

What types of use cases does the platform support today?

It's currently focussed on industrial and operational AI use cases, including anomaly detection, predictive maintenance, time-series forecasting, sensor analytics, and decision intelligence. These are problems where reliability, efficiency, and explainability matter as much as raw accuracy. Support continues to expand as new model types and capabilities are released.

Do I need AI or data science expertise to use the platform?

No. The platform is built to be usable by teams without dedicated AI or data science specialists. Most workflows can be completed through the guided web interface with minimal configuration. For engineering teams that want deeper control, the API provides advanced options and tighter integration, but this is entirely optional.

Do I need GPUs or specialised hardware to use it?

No. Model training is handled by Literal Labs' managed infrastructure, and trained models do not require GPUs or accelerators to run. Models produced by the platform are designed to operate efficiently on standard CPUs, microcontrollers, and edge devices, as well as on servers.

What infrastructure do I need to get started?

Very little. To begin, you only need a supported dataset and a web browser. There is no requirement to provision training infrastructure, manage clusters, or install complex toolchains. Deployment targets can range from embedded devices to cloud servers, depending on your use case.

How does Literal Labs differ from traditional ML platforms?

Traditional ML platforms are built around large, numerically intensive models that demand specialised hardware and complex operational pipelines. Literal Labs takes a different approach, producing compact, efficient models optimised for real-world deployment. The result is faster training, simpler deployment, lower energy consumption, and models that are easier to understand and maintain.

How do I get access, pricing, or start a pilot?

You can request access or discuss pricing directly by contacting us. The team offers guided pilots for companies that want to evaluate the platform on real data and real deployment targets. This allows you to assess performance, integration effort, and business value before committing further.